As the title says, I wrote a PoC for CVE-2018-5390 / SegmentSmack, a vulnerability in the Linux kernel, and used it to test and observe the issue.

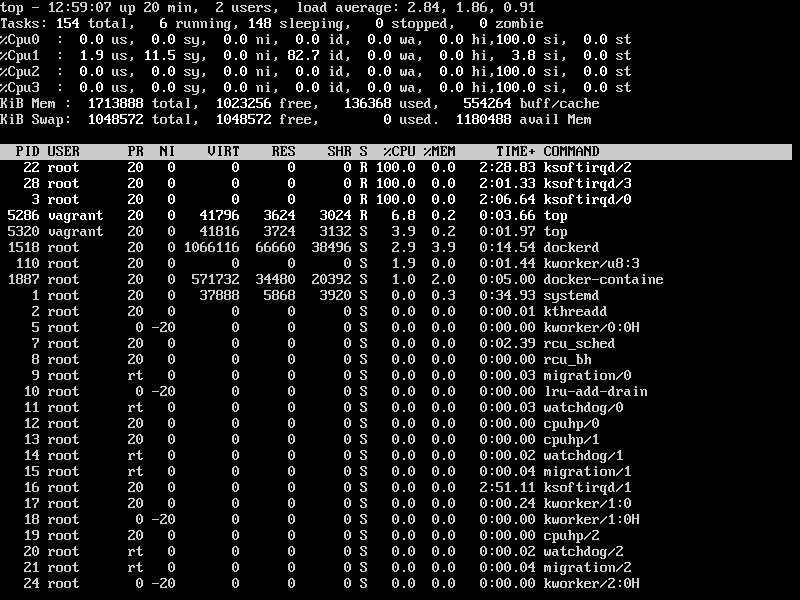

Looks like I managed to reproduce CVE-2018-5390 SegmentSmack on VirtualBox? (si = software interrupts stays pinned near 100%, and tcp_collapse_ofo_queue() is being called.) The PoC ran locally, so its own CPU usage is probably mixed in; I should redo this properly with two VMs. pic.twitter.com/jKsDbj7LIK

— ito hiroya (@hiboma) October 2, 2018

I managed to reproduce the problem, but writing code that reaches exploit level, i.e. something actually abusable, is hard. (Do not misuse any of this.)

⚠️

This is the CVE that caused a stir in August 2018 under the name SegmentSmack.

CVE description

Linux kernel versions 4.9+ can be forced to make very expensive calls to tcp_collapse_ofo_queue() and tcp_prune_ofo_queue() for every incoming packet which can lead to a denial of service.

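From the description, a DoS can be triggered by crafting TCP segments so that the following two functions get called: tcp_collapse_ofo_queue() and tcp_prune_ofo_queue().

ofo_queue refers to the out-of-order queue. (The write-up I relied on for this was detailed and extremely helpful. Thanks++)

So the task is to craft TCP segments that end up queued in the target host's out-of-order queue.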

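Reading Red Hat's explanation too

Red Hat describes in detail what happens on a host when the flaw described in this CVE is exploited for a DoS attack:

- In top, si (software interrupt) stays pinned at 100%

- The CPU is being monopolized by the ksoftirqd kernel threads

- As many ksoftirqd threads as there are logical CPUs can be driven into DoS

The goal is to write a PoC that reproduces the state above.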
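To check for the symptoms listed above without staring at top, the per-CPU softirq share can be derived from two snapshots of /proc/stat. The following is a minimal sketch, not part of the original PoC; the function names are hypothetical, and it assumes the usual field order of the cpuN lines (user, nice, system, idle, iowait, irq, softirq, steal, ...).

```python
SOFTIRQ_INDEX = 6  # position of "softirq" among the cpuN counters


def parse_cpu_times(stat_text):
    """Return {cpu_name: [counters]} for the per-CPU lines of /proc/stat."""
    times = {}
    for line in stat_text.splitlines():
        fields = line.split()
        # Per-CPU lines look like "cpu0 ..."; the aggregate "cpu" line is skipped.
        if fields and fields[0].startswith("cpu") and fields[0] != "cpu":
            times[fields[0]] = [int(value) for value in fields[1:]]
    return times


def softirq_percent(before_text, after_text):
    """Percentage of time each CPU spent in softirq between two snapshots."""
    before = parse_cpu_times(before_text)
    after = parse_cpu_times(after_text)
    result = {}
    for cpu, new in after.items():
        old = before.get(cpu)
        if old is None:
            continue
        deltas = [n - o for n, o in zip(new, old)]
        # Only the first eight fields are summed; guest time is already
        # accounted for inside user/nice.
        total = sum(deltas[:8])
        if total > 0:
            result[cpu] = 100.0 * deltas[SOFTIRQ_INDEX] / total
    return result
```

On the VM under attack, reading /proc/stat twice about a second apart and passing both strings to softirq_percent() should show values near 100 for the affected CPUs.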

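The Linux kernel fix patches

They are a bit long, so I only excerpt them here, but the commit messages and source diffs are the most important hints when writing a PoC.

They give hints such as the following.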

Before linux-4.9, we would have pruned all packets in ofo_queue in one go, every XXXX packets. XXXX depends on sk_rcvbuf and skbs truesize, but is about 7000 packets with tcp_rmem[2] default of 6 MB.

This gives a rough idea of how many packets need to be sent.
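The arithmetic behind the "about 7000 packets" figure can be written out as a small sketch. The 6 MB value comes from the commit message; the per-skb truesize is not stated there, so the 896 bytes used below is purely an illustrative assumption (real values depend on the NIC driver and kernel configuration), and both function names are hypothetical.

```python
TCP_RMEM_MAX = 6 * 1024 * 1024  # tcp_rmem[2] default quoted in the commit message


def packets_to_fill(rcvbuf_bytes, truesize_bytes):
    """How many skbs of a given truesize fit into the receive buffer."""
    return rcvbuf_bytes // truesize_bytes


def implied_truesize(rcvbuf_bytes, packet_count):
    """Average truesize implied by a buffer size and a packet count."""
    return rcvbuf_bytes / packet_count


# The commit's "about 7000 packets" implies roughly 900 bytes of truesize per skb.
print(round(implied_truesize(TCP_RMEM_MAX, 7000)))
# With an assumed truesize of 896 bytes the estimate lands near 7000 again.
print(packets_to_fill(TCP_RMEM_MAX, 896))
```

The point is that truesize counts the whole struct sk_buff plus its buffer, so even a payload of a few bytes consumes several hundred bytes of receive buffer.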

Calling tcp_collapse_ofo_queue() in this case is not useful, and offers a O(N^2) surface attack to malicious peers.

Apparently tcp_collapse_ofo_queue() is O(N^2) and therefore inefficient.

1) Do not attempt to collapse tiny skbs.

tiny skb = a small struct sk_buff, which tells us the data carried in the TCP payload can be small.

The patches in detail

From 72cd43ba64fc172a443410ce01645895850844c8 Mon Sep 17 00:00:00 2001

From: Eric Dumazet <edumazet@google.com>

Date: Mon, 23 Jul 2018 09:28:17 -0700

Subject: tcp: free batches of packets in tcp_prune_ofo_queue()

Juha-Matti Tilli reported that malicious peers could inject tiny

packets in out_of_order_queue, forcing very expensive calls

to tcp_collapse_ofo_queue() and tcp_prune_ofo_queue() for

every incoming packet. out_of_order_queue rb-tree can contain

thousands of nodes, iterating over all of them is not nice.

Before linux-4.9, we would have pruned all packets in ofo_queue

in one go, every XXXX packets. XXXX depends on sk_rcvbuf and skbs

truesize, but is about 7000 packets with tcp_rmem[2] default of 6 MB.

Since we plan to increase tcp_rmem[2] in the future to cope with

modern BDP, can not revert to the old behavior, without great pain.

Strategy taken in this patch is to purge ~12.5 % of the queue capacity.

Fixes: 36a6503fedda ("tcp: refine tcp_prune_ofo_queue() to not drop all packets")

Signed-off-by: Eric Dumazet <edumazet@google.com>

Reported-by: Juha-Matti Tilli <juha-matti.tilli@iki.fi>

Acked-by: Yuchung Cheng <ycheng@google.com>

Acked-by: Soheil Hassas Yeganeh <soheil@google.com>

Signed-off-by: David S. Miller <davem@davemloft.net>

---

net/ipv4/tcp_input.c | 15 +++++++++++----

1 file changed, 11 insertions(+), 4 deletions(-)

diff --git a/net/ipv4/tcp_input.c b/net/ipv4/tcp_input.c

index 6bade06aaf72..64e45b279431 100644

--- a/net/ipv4/tcp_input.c

+++ b/net/ipv4/tcp_input.c

@@ -4942,6 +4942,7 @@ new_range:

* 2) not add too big latencies if thousands of packets sit there.

* (But if application shrinks SO_RCVBUF, we could still end up

* freeing whole queue here)

+ * 3) Drop at least 12.5 % of sk_rcvbuf to avoid malicious attacks.

*

* Return true if queue has shrunk.

*/

@@ -4949,20 +4950,26 @@ static bool tcp_prune_ofo_queue(struct sock *sk)

{

struct tcp_sock *tp = tcp_sk(sk);

struct rb_node *node, *prev;

+ int goal;

if (RB_EMPTY_ROOT(&tp->out_of_order_queue))

return false;

NET_INC_STATS(sock_net(sk), LINUX_MIB_OFOPRUNED);

+ goal = sk->sk_rcvbuf >> 3;

node = &tp->ooo_last_skb->rbnode;

do {

prev = rb_prev(node);

rb_erase(node, &tp->out_of_order_queue);

+ goal -= rb_to_skb(node)->truesize;

tcp_drop(sk, rb_to_skb(node));

- sk_mem_reclaim(sk);

- if (atomic_read(&sk->sk_rmem_alloc) <= sk->sk_rcvbuf &&

- !tcp_under_memory_pressure(sk))

- break;

+ if (!prev || goal <= 0) {

+ sk_mem_reclaim(sk);

+ if (atomic_read(&sk->sk_rmem_alloc) <= sk->sk_rcvbuf &&

+ !tcp_under_memory_pressure(sk))

+ break;

+ goal = sk->sk_rcvbuf >> 3;

+ }

node = prev;

} while (node);

tp->ooo_last_skb = rb_to_skb(prev);

--

cgit 1.2-0.3.lf.el7
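To make the effect of this first patch easier to see, here is a simplified user-space model of the two loop variants. It is a sketch under assumptions: the queue is reduced to a list of truesize values, dropping an skb is modeled as subtracting its truesize from rmem_alloc, and the tcp_under_memory_pressure() check is ignored. The function names are hypothetical.

```python
def prune_old(truesizes, rmem_alloc, rcvbuf):
    """Pre-patch loop: re-check memory after every single dropped skb."""
    queue = list(truesizes)
    dropped = 0
    while queue:
        rmem_alloc -= queue.pop()  # drop from the tail of the queue
        dropped += 1
        if rmem_alloc <= rcvbuf:
            break
    return dropped, rmem_alloc


def prune_new(truesizes, rmem_alloc, rcvbuf):
    """Patched loop: only re-check after freeing about rcvbuf/8 bytes."""
    queue = list(truesizes)
    dropped = 0
    goal = rcvbuf >> 3
    while queue:
        size = queue.pop()
        rmem_alloc -= size
        goal -= size
        dropped += 1
        if not queue or goal <= 0:
            if rmem_alloc <= rcvbuf:
                break
            goal = rcvbuf >> 3
    return dropped, rmem_alloc


# 81 tiny skbs of 100 bytes each, just above an 8000-byte receive buffer.
print(prune_old([100] * 81, 8100, 8000))  # frees a single skb
print(prune_new([100] * 81, 8100, 8000))  # frees a whole batch
```

In this model the old loop frees one skb and returns with the buffer exactly at its limit, so the very next tiny segment triggers pruning again. The patched loop frees a batch, which gives the receiver some headroom before the expensive path runs again.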

From f4a3313d8e2ca9fd8d8f45e40a2903ba782607e7 Mon Sep 17 00:00:00 2001

From: Eric Dumazet <edumazet@google.com>

Date: Mon, 23 Jul 2018 09:28:18 -0700

Subject: tcp: avoid collapses in tcp_prune_queue() if possible

Right after a TCP flow is created, receiving tiny out of order

packets allways hit the condition :

if (atomic_read(&sk->sk_rmem_alloc) >= sk->sk_rcvbuf)

tcp_clamp_window(sk);

tcp_clamp_window() increases sk_rcvbuf to match sk_rmem_alloc

(guarded by tcp_rmem[2])

Calling tcp_collapse_ofo_queue() in this case is not useful,

and offers a O(N^2) surface attack to malicious peers.

Better not attempt anything before full queue capacity is reached,

forcing attacker to spend lots of resource and allow us to more

easily detect the abuse.

Signed-off-by: Eric Dumazet <edumazet@google.com>

Acked-by: Soheil Hassas Yeganeh <soheil@google.com>

Acked-by: Yuchung Cheng <ycheng@google.com>

Signed-off-by: David S. Miller <davem@davemloft.net>

---

net/ipv4/tcp_input.c | 3 +++

1 file changed, 3 insertions(+)

diff --git a/net/ipv4/tcp_input.c b/net/ipv4/tcp_input.c

index 64e45b279431..53289911362a 100644

--- a/net/ipv4/tcp_input.c

+++ b/net/ipv4/tcp_input.c

@@ -5004,6 +5004,9 @@ static int tcp_prune_queue(struct sock *sk)

else if (tcp_under_memory_pressure(sk))

tp->rcv_ssthresh = min(tp->rcv_ssthresh, 4U * tp->advmss);

+ if (atomic_read(&sk->sk_rmem_alloc) <= sk->sk_rcvbuf)

+ return 0;

+

tcp_collapse_ofo_queue(sk);

if (!skb_queue_empty(&sk->sk_receive_queue))

tcp_collapse(sk, &sk->sk_receive_queue, NULL,

--

cgit 1.2-0.3.lf.el7

From 3d4bf93ac12003f9b8e1e2de37fe27983deebdcf Mon Sep 17 00:00:00 2001

From: Eric Dumazet <edumazet@google.com>

Date: Mon, 23 Jul 2018 09:28:19 -0700

Subject: tcp: detect malicious patterns in tcp_collapse_ofo_queue()

In case an attacker feeds tiny packets completely out of order,

tcp_collapse_ofo_queue() might scan the whole rb-tree, performing

expensive copies, but not changing socket memory usage at all.

1) Do not attempt to collapse tiny skbs.

2) Add logic to exit early when too many tiny skbs are detected.

We prefer not doing aggressive collapsing (which copies packets)

for pathological flows, and revert to tcp_prune_ofo_queue() which

will be less expensive.

In the future, we might add the possibility of terminating flows

that are proven to be malicious.

Signed-off-by: Eric Dumazet <edumazet@google.com>

Acked-by: Soheil Hassas Yeganeh <soheil@google.com>

Signed-off-by: David S. Miller <davem@davemloft.net>

---

net/ipv4/tcp_input.c | 15 +++++++++++++--

1 file changed, 13 insertions(+), 2 deletions(-)

diff --git a/net/ipv4/tcp_input.c b/net/ipv4/tcp_input.c

index 53289911362a..78068b902e7b 100644

--- a/net/ipv4/tcp_input.c

+++ b/net/ipv4/tcp_input.c

@@ -4902,6 +4902,7 @@ end:

static void tcp_collapse_ofo_queue(struct sock *sk)

{

struct tcp_sock *tp = tcp_sk(sk);

+ u32 range_truesize, sum_tiny = 0;

struct sk_buff *skb, *head;

u32 start, end;

@@ -4913,6 +4914,7 @@ new_range:

}

start = TCP_SKB_CB(skb)->seq;

end = TCP_SKB_CB(skb)->end_seq;

+ range_truesize = skb->truesize;

for (head = skb;;) {

skb = skb_rb_next(skb);

@@ -4923,11 +4925,20 @@ new_range:

if (!skb ||

after(TCP_SKB_CB(skb)->seq, end) ||

before(TCP_SKB_CB(skb)->end_seq, start)) {

- tcp_collapse(sk, NULL, &tp->out_of_order_queue,

- head, skb, start, end);

+ /* Do not attempt collapsing tiny skbs */

+ if (range_truesize != head->truesize ||

+ end - start >= SKB_WITH_OVERHEAD(SK_MEM_QUANTUM)) {

+ tcp_collapse(sk, NULL, &tp->out_of_order_queue,

+ head, skb, start, end);

+ } else {

+ sum_tiny += range_truesize;

+ if (sum_tiny > sk->sk_rcvbuf >> 3)

+ return;

+ }

goto new_range;

}

+ range_truesize += skb->truesize;

if (unlikely(before(TCP_SKB_CB(skb)->seq, start)))

start = TCP_SKB_CB(skb)->seq;

if (after(TCP_SKB_CB(skb)->end_seq, end))

--

cgit 1.2-0.3.lf.el7
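The early-exit logic added by this third patch can be modeled in a few lines. This is a rough sketch, not kernel code: skbs are plain (seq, end_seq, truesize) tuples sorted by seq, sequence number wraparound is ignored, and the value 3776 for SKB_WITH_OVERHEAD(SK_MEM_QUANTUM) is an assumption for a typical x86_64 build. scan_ofo_queue is a hypothetical name.

```python
TINY_RANGE_BYTES = 3776  # assumed SKB_WITH_OVERHEAD(SK_MEM_QUANTUM) on x86_64


def scan_ofo_queue(skbs, rcvbuf, tiny_limit=TINY_RANGE_BYTES):
    """Walk contiguous ranges like the patched tcp_collapse_ofo_queue().

    Returns (ranges_collapsed, ranges_visited, bailed_out).
    """
    collapsed = visited = sum_tiny = 0
    i = 0
    while i < len(skbs):
        start, end, head_truesize = skbs[i]
        range_truesize = head_truesize
        i += 1
        # Extend the range while the next skb overlaps or touches it.
        while i < len(skbs) and skbs[i][0] <= end and skbs[i][1] >= start:
            seq, end_seq, truesize = skbs[i]
            range_truesize += truesize
            start = min(start, seq)
            end = max(end, end_seq)
            i += 1
        visited += 1
        if range_truesize != head_truesize or end - start >= tiny_limit:
            collapsed += 1  # worth collapsing: several skbs or a large span
        else:
            sum_tiny += range_truesize
            if sum_tiny > (rcvbuf >> 3):
                return collapsed, visited, True  # too many tiny skbs: give up
    return collapsed, visited, False


# 100 isolated one-byte segments, each with an assumed truesize of 900 bytes.
tiny = [(10 * k, 10 * k + 1, 900) for k in range(100)]
print(scan_ofo_queue(tiny, rcvbuf=16000))
```

With isolated tiny segments the scan stops after a handful of ranges instead of walking the whole tree, which is the behavior the commit message describes as reverting to tcp_prune_ofo_queue().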

From 8541b21e781a22dce52a74fef0b9bed00404a1cd Mon Sep 17 00:00:00 2001

From: Eric Dumazet <edumazet@google.com>

Date: Mon, 23 Jul 2018 09:28:20 -0700

Subject: tcp: call tcp_drop() from tcp_data_queue_ofo()

In order to be able to give better diagnostics and detect

malicious traffic, we need to have better sk->sk_drops tracking.

Fixes: 9f5afeae5152 ("tcp: use an RB tree for ooo receive queue")

Signed-off-by: Eric Dumazet <edumazet@google.com>

Acked-by: Soheil Hassas Yeganeh <soheil@google.com>

Acked-by: Yuchung Cheng <ycheng@google.com>

Signed-off-by: David S. Miller <davem@davemloft.net>

---

net/ipv4/tcp_input.c | 4 ++--

1 file changed, 2 insertions(+), 2 deletions(-)

diff --git a/net/ipv4/tcp_input.c b/net/ipv4/tcp_input.c

index 78068b902e7b..b062a7692238 100644

--- a/net/ipv4/tcp_input.c

+++ b/net/ipv4/tcp_input.c

@@ -4510,7 +4510,7 @@ coalesce_done:

/* All the bits are present. Drop. */

NET_INC_STATS(sock_net(sk),

LINUX_MIB_TCPOFOMERGE);

- __kfree_skb(skb);

+ tcp_drop(sk, skb);

skb = NULL;

tcp_dsack_set(sk, seq, end_seq);

goto add_sack;

@@ -4529,7 +4529,7 @@ coalesce_done:

TCP_SKB_CB(skb1)->end_seq);

NET_INC_STATS(sock_net(sk),

LINUX_MIB_TCPOFOMERGE);

- __kfree_skb(skb1);

+ tcp_drop(sk, skb1);

goto merge_right;

}

} else if (tcp_try_coalesce(sk, skb1,

--

cgit 1.2-0.3.lf.el7

From 58152ecbbcc6a0ce7fddd5bf5f6ee535834ece0c Mon Sep 17 00:00:00 2001

From: Eric Dumazet <edumazet@google.com>

Date: Mon, 23 Jul 2018 09:28:21 -0700

Subject: tcp: add tcp_ooo_try_coalesce() helper

In case skb in out_or_order_queue is the result of

multiple skbs coalescing, we would like to get a proper gso_segs

counter tracking, so that future tcp_drop() can report an accurate

number.

I chose to not implement this tracking for skbs in receive queue,

since they are not dropped, unless socket is disconnected.

Signed-off-by: Eric Dumazet <edumazet@google.com>

Acked-by: Soheil Hassas Yeganeh <soheil@google.com>

Acked-by: Yuchung Cheng <ycheng@google.com>

Signed-off-by: David S. Miller <davem@davemloft.net>

---

net/ipv4/tcp_input.c | 25 +++++++++++++++++++++----

1 file changed, 21 insertions(+), 4 deletions(-)

diff --git a/net/ipv4/tcp_input.c b/net/ipv4/tcp_input.c

index b062a7692238..3bcd30a2ba06 100644

--- a/net/ipv4/tcp_input.c

+++ b/net/ipv4/tcp_input.c

@@ -4358,6 +4358,23 @@ static bool tcp_try_coalesce(struct sock *sk,

return true;

}

+static bool tcp_ooo_try_coalesce(struct sock *sk,

+ struct sk_buff *to,

+ struct sk_buff *from,

+ bool *fragstolen)

+{

+ bool res = tcp_try_coalesce(sk, to, from, fragstolen);

+

+ /* In case tcp_drop() is called later, update to->gso_segs */

+ if (res) {

+ u32 gso_segs = max_t(u16, 1, skb_shinfo(to)->gso_segs) +

+ max_t(u16, 1, skb_shinfo(from)->gso_segs);

+

+ skb_shinfo(to)->gso_segs = min_t(u32, gso_segs, 0xFFFF);

+ }

+ return res;

+}

+

static void tcp_drop(struct sock *sk, struct sk_buff *skb)

{

sk_drops_add(sk, skb);

@@ -4481,8 +4498,8 @@ static void tcp_data_queue_ofo(struct sock *sk, struct sk_buff *skb)

/* In the typical case, we are adding an skb to the end of the list.

* Use of ooo_last_skb avoids the O(Log(N)) rbtree lookup.

*/

- if (tcp_try_coalesce(sk, tp->ooo_last_skb,

- skb, &fragstolen)) {

+ if (tcp_ooo_try_coalesce(sk, tp->ooo_last_skb,

+ skb, &fragstolen)) {

coalesce_done:

tcp_grow_window(sk, skb);

kfree_skb_partial(skb, fragstolen);

@@ -4532,8 +4549,8 @@ coalesce_done:

tcp_drop(sk, skb1);

goto merge_right;

}

- } else if (tcp_try_coalesce(sk, skb1,

- skb, &fragstolen)) {

+ } else if (tcp_ooo_try_coalesce(sk, skb1,

+ skb, &fragstolen)) {

goto coalesce_done;

}

p = &parent->rb_right;

--

cgit 1.2-0.3.lf.el7
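The gso_segs bookkeeping in tcp_ooo_try_coalesce() is a saturating addition on a 16-bit counter. A tiny model of that arithmetic, with a hypothetical function name, looks like this:

```python
def coalesced_gso_segs(to_segs, from_segs):
    """Mirror the patch: count each skb as at least one segment, cap at 0xFFFF."""
    total = max(1, to_segs) + max(1, from_segs)
    return min(total, 0xFFFF)


print(coalesced_gso_segs(0, 0))       # two plain skbs count as 2 segments
print(coalesced_gso_segs(0xFFFF, 1))  # stays capped at 65535
```

The cap matters because gso_segs is a u16 field, so many coalesced tiny segments could otherwise wrap the counter and make the drop statistics misleading.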

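Writing the PoC

As usual, the source stays private. I implemented the TCP 3-way handshake in C the straightforward way, but there were several pitfalls and it took quite some effort.

Observations while running the PoC

I created two VMs with Vagrant, one running the PoC and one under attack, and observed them.

- The VM under attack ran Ubuntu Xenial + Linux Kernel v4.9.35

  - I picked v4.9.35 only because I happened to have an already-built source tree for it

- I also removed static from the static functions in net/ipv4/tcp_input.c so that they are easier to trace with perf-tools

Are the problematic functions actually executed?

On the VM receiving the crafted TCP segments, I ran perf-tools (funcgraph or functrace) and confirmed that tcp_collapse_ofo_queue() and tcp_prune_ofo_queue() are called.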

tcp_prune_ofo_queue

    vagrant@vagrant:~$ sudo perf-tools/bin/funcgraph -a -m1 tcp_prune_ofo_queue
    Tracing "tcp_prune_ofo_queue"... Ctrl-C to end.
    # tracer: function_graph
    #
    #     TIME        CPU  TASK/PID         DURATION                  FUNCTION CALLS
    #      |          |     |    |           |   |                     |   |   |   |
    2140.222909 |   1)  ksoftir-16  |               |  tcp_prune_ofo_queue() {
    2140.224447 |   1)  ksoftir-16  | # 1482.527 us |  }
    2140.276967 |   1)  ksoftir-16  | + 36.739 us   |  tcp_prune_ofo_queue();
    2140.334372 |   1)  ksoftir-16  | + 26.140 us   |  tcp_prune_ofo_queue();
    2140.389904 |   1)  ksoftir-16  | + 29.224 us   |  tcp_prune_ofo_queue();
    2140.477459 |   1)  ksoftir-16  | + 37.779 us   |  tcp_prune_ofo_queue();
    2140.530627 |   1)  ksoftir-16  | + 22.656 us   |  tcp_prune_ofo_queue();
    2140.582696 |   1)  ksoftir-16  | + 52.027 us   |  tcp_prune_ofo_queue();
    2140.684221 |   1)  ksoftir-16  | + 23.508 us   |  tcp_prune_ofo_queue();
    2140.825388 |   1)  ksoftir-16  | + 51.326 us   |  tcp_prune_ofo_queue();
    2140.875775 |   1)  ksoftir-16  | + 37.501 us   |  tcp_prune_ofo_queue();
    2140.932141 |   1)  ksoftir-16  | + 64.618 us   |  tcp_prune_ofo_queue();
    2141.017871 |   1)  ksoftir-16  | + 31.709 us   |  tcp_prune_ofo_queue();
    2141.073205 |   1)  ksoftir-16  | + 40.137 us   |  tcp_prune_ofo_queue();
    2141.122228 |   1)  ksoftir-16  | + 27.673 us   |  tcp_prune_ofo_queue();
    2141.185288 |   1)  ksoftir-16  | + 19.458 us   |  tcp_prune_ofo_queue();
    2141.274617 |   1)  ksoftir-16  | + 29.757 us   |  tcp_prune_ofo_queue();
    2141.330583 |   1)  ksoftir-16  | + 37.548 us   |  tcp_prune_ofo_queue();
    2141.416376 |   1)  ksoftir-16  | + 40.630 us   |  tcp_prune_ofo_queue();
    2141.479812 |   1)  ksoftir-16  | + 37.375 us   |  tcp_prune_ofo_queue();

tcp_collapse_ofo_queue

    vagrant@vagrant:~$ sudo perf-tools/bin/funcgraph -a -m1 tcp_collapse_ofo_queue
    Tracing "tcp_collapse_ofo_queue"... Ctrl-C to end.
    # tracer: function_graph
    #
    #     TIME        CPU  TASK/PID         DURATION                  FUNCTION CALLS
    #      |          |     |    |           |   |                     |   |   |   |
    2257.227959 |   1)  ksoftir-16  |               |  tcp_collapse_ofo_queue() {
    2257.337183 |   1)  ksoftir-16  | @ 109177.3 us |  }
    2257.372432 |   1)  ksoftir-16  |               |  tcp_collapse_ofo_queue() {
    2257.488423 |   1)  ksoftir-16  | @ 115734.3 us |  }
    2257.489441 |   1)  ksoftir-16  |               |  tcp_collapse_ofo_queue() {
    2257.639130 |   1)  ksoftir-16  | @ 149521.3 us |  }
    2257.640056 |   1)  ksoftir-16  |               |  tcp_collapse_ofo_queue() {
    2257.785587 |   1)  ksoftir-16  | @ 112317.8 us |  }
    2257.786831 |   1)  ksoftir-16  |               |  tcp_collapse_ofo_queue() {
    2257.982993 |   1)  ksoftir-16  | @ 196142.4 us |  }
    2257.985520 |   1)  ksoftir-16  |               |  tcp_collapse_ofo_queue() {
    2258.128075 |   1)  ksoftir-16  | @ 142536.9 us |  }
    2258.131095 |   1)  ksoftir-16  |               |  tcp_collapse_ofo_queue() {
    2258.276400 |   1)  ksoftir-16  | @ 145283.7 us |  }
    2258.276957 |   1)  ksoftir-16  |               |  tcp_collapse_ofo_queue() {
    2258.388536 |   1)  ksoftir-16  | @ 111559.1 us |  }
    2258.389115 |   1)  ksoftir-16  |               |  tcp_collapse_ofo_queue() {
    2258.523263 |   1)  ksoftir-16  | @ 133975.7 us |  }
    2258.523490 |   1)  ksoftir-16  |               |  tcp_collapse_ofo_queue() {
    2258.587686 |   1)  ksoftir-16  | * 64186.72 us |  }
    2258.589001 |   1)  ksoftir-16  |               |  tcp_collapse_ofo_queue() {
    2258.691870 |   1)  ksoftir-16  | @ 102819.5 us |  }
    2258.723109 |   1)  ksoftir-16  |               |  tcp_collapse_ofo_queue() {
    2258.874353 |   1)  ksoftir-16  | @ 146088.5 us |  }

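tcp_collapse_ofo_queue() clearly takes longer: roughly 60 to 200 ms per call, versus mostly tens of microseconds for tcp_prune_ofo_queue(). Well, the commit message did say O(N^2).

netstat -s statistics

Watching the netstat -s statistics, the values marked with 👈 keep climbing.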

Every 0.2s: netstat -s | grep -i -e tcp -e queue Fri Oct 5 03:30:59 2018

Tcp:

TcpExt:

3790 packets pruned from receive queue because of socket buffer overrun 👈

3790 packets dropped from out-of-order queue because of socket buffer overrun 👈

1 packets directly queued to recvmsg prequeue.

46 other TCP timeouts

2105538 packets collapsed in receive queue due to low socket buffer 👈

TCPBacklogDrop: 65384

TCPRetransFail: 3

TCPRcvCoalesce: 8

TCPOFOQueue: 19501 👈

TCPChallengeACK: 1

TCPSYNChallenge: 1

TCPSpuriousRtxHostQueues: 465

TCPAutoCorking: 1871

TCPWantZeroWindowAdv: 31183 👈

TCPSynRetrans: 15

TCPOrigDataSent: 46879

TCPHystartTrainDetect: 3

TCPHystartTrainCwnd: 56

This should be useful for monitoring and investigation if a host whose kernel has not been updated comes under attack.

📝

The figures reported by netstat -s correspond to the following five LINUX_MIB_**** enum values in the kernel.

- LINUX_MIB_OFOPRUNED

- LINUX_MIB_RCVPRUNED

- LINUX_MIB_TCPRCVCOLLAPSED

- LINUX_MIB_TCPOFOQUEUE

- LINUX_MIB_TCPWANTZEROWINDOWADV

These are useful hints when reading the source.
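For monitoring, the same counters can be read directly from /proc/net/netstat instead of grepping netstat -s. The sketch below makes some assumptions: the TcpExt field names (OfoPruned, RcvPruned, TCPRcvCollapsed, TCPOFOQueue, TCPWantZeroWindowAdv) are my mapping of the enum names above onto the names the kernel exports, and the helper names are hypothetical.

```python
WATCHED = (
    "OfoPruned",
    "RcvPruned",
    "TCPRcvCollapsed",
    "TCPOFOQueue",
    "TCPWantZeroWindowAdv",
)


def parse_netstat(text):
    """Parse /proc/net/netstat content into {"TcpExt.Name": value}."""
    counters = {}
    # The file consists of line pairs: a header line with names, then values.
    lines = [line for line in text.splitlines() if ":" in line]
    for header, values in zip(lines[0::2], lines[1::2]):
        h_prefix, names = header.split(":", 1)
        v_prefix, numbers = values.split(":", 1)
        if h_prefix != v_prefix:
            continue
        for name, number in zip(names.split(), numbers.split()):
            counters[f"{h_prefix}.{name}"] = int(number)
    return counters


def watched_deltas(before, after):
    """Growth of the watched TcpExt counters between two parsed snapshots."""
    return {
        name: after.get(f"TcpExt.{name}", 0) - before.get(f"TcpExt.{name}", 0)
        for name in WATCHED
    }
```

Feeding two snapshots taken a few seconds apart into watched_deltas() shows which counters are growing; rapid growth across several of them at once, together with high si, would be worth investigating.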

CPU usage

While testing the PoC I noticed that, unlike what the Red Hat article describes, not all CPUs were reaching 100%, so I went back and looked into how to spread softirqs across CPUs. Thanks to the resources that helped!

And here is a screenshot in which several ksoftirqd threads are nicely pinned at 100% in si (software interrupt).

The above was captured by manually steering things to catch the moment when three ksoftirqd threads happened to hit 100%; automating everything into a sustained DoS is hard (for me, at least). A naive implementation keeps the DoS going for only ten-odd seconds. It seems necessary to control the volume of crafted TCP segments appropriately, and to keep sending them while mimicking a legitimate exchange so that the peer does not DROP them.

(Once the PoC could keep ksoftirqd hogging the CPU for 2 to 3 minutes, my motivation dropped a bit, so I am not sure whether I will keep working on it.)

Miscellaneous

Any TCP port can be targeted, so I ran my tests against sshd, nginx, and apache.

With Apache2 the log looks like this:

    vagrant@vagrant:~$ sudo tail -F /var/log/apache2/access.log
    192.168.30.20 - - [06/Oct/2018:14:56:29 +0000] "-" 408 0 "-" "-"
    192.168.30.20 - - [06/Oct/2018:14:56:43 +0000] "-" 408 0 "-" "-"
    192.168.30.20 - - [07/Oct/2018:01:29:41 +0000] "-" 408 0 "-" "-"
    192.168.30.20 - - [07/Oct/2018:01:29:49 +0000] "-" 408 0 "-" "-"
    192.168.30.20 - - [07/Oct/2018:01:30:21 +0000] "-" 408 0 "-" "-"
    192.168.30.20 - - [07/Oct/2018:01:30:33 +0000] "-" 408 0 "-" "-"
    192.168.30.20 - - [07/Oct/2018:01:31:33 +0000] "-" 408 0 "-" "-"
    192.168.30.20 - - [07/Oct/2018:01:32:02 +0000] "-" 408 0 "-" "-"
    192.168.30.20 - - [07/Oct/2018:01:32:12 +0000] "-" 408 0 "-" "-"
    192.168.30.20 - - [07/Oct/2018:01:32:27 +0000] "-" 408 0 "-" "-"
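Log entries like these can be tallied per client as a rough investigation aid. This is only a sketch: it assumes the combined log format shown above, the names are hypothetical, and a 408 with an empty request line also occurs for ordinary idle connections, so a high count is a hint rather than proof of an attack.

```python
import re
from collections import Counter

# Matches lines whose request field is "-" and whose status code is 408.
EMPTY_408 = re.compile(r'^(\S+) \S+ \S+ \[[^\]]+\] "-" 408 ')


def count_empty_408(lines):
    """Count 408 responses with an empty request line, keyed by client address."""
    hits = Counter()
    for line in lines:
        match = EMPTY_408.match(line)
        if match:
            hits[match.group(1)] += 1
    return hits
```

Passing the lines of access.log to count_empty_408() and looking at most_common() gives a quick view of which clients repeatedly open connections without ever completing a request.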

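Any open TCP port can be attacked, so protocols other than HTTP need attention too 🔥

Thoughts

- I managed to write a PoC that calls the problematic functions, but as an exploit it is not very efficient. There still seems to be room for improvement (? ☠️ ?)

- There are parts of TCP session handling I do not fully understand yet, so this is a CVE I would like to study a bit more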